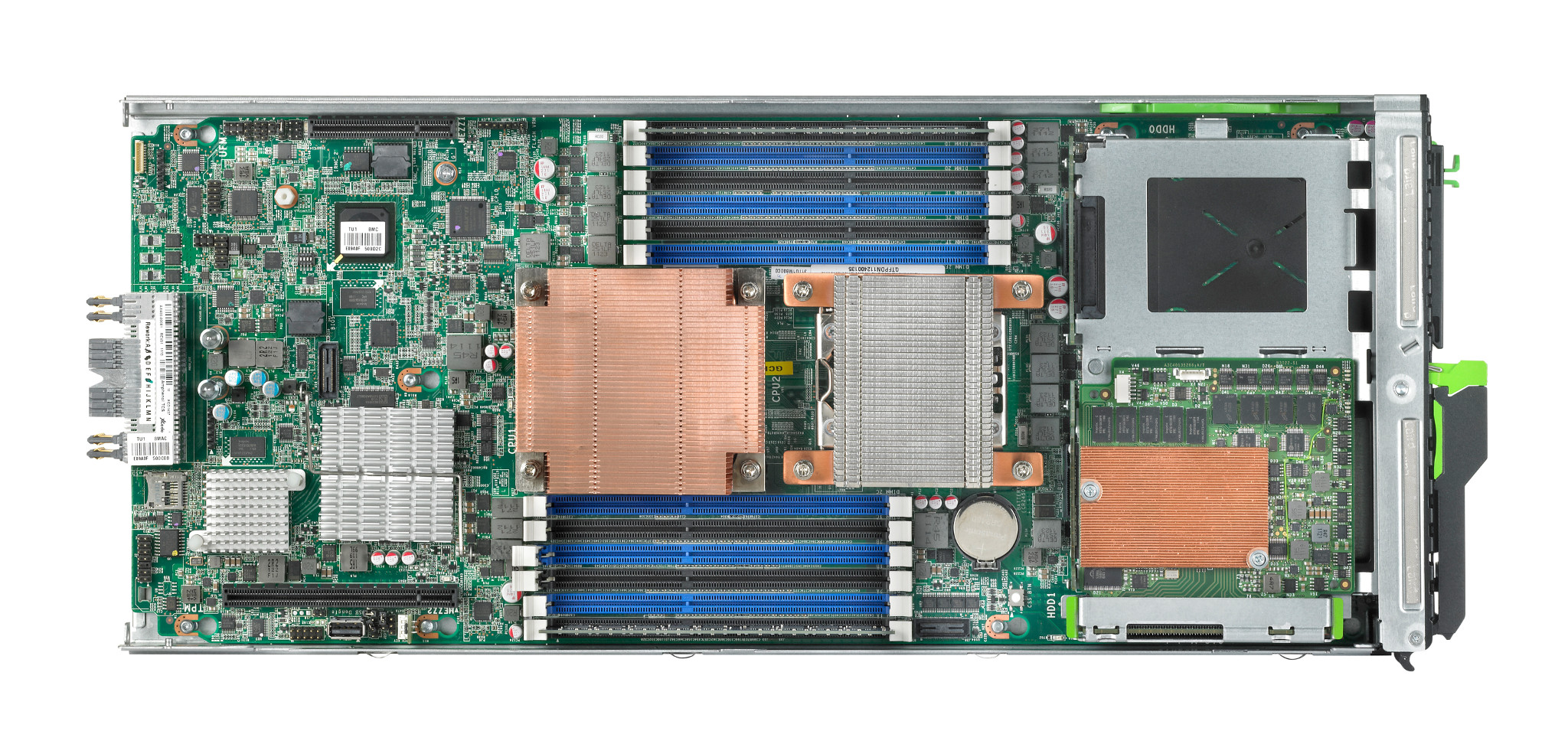

VIOM (Virtual-IO Manager), or VAUM as I like to pronounce it, is Fujitsu's I/O virtualization technology, which is built into its PRIMERGY line of servers. The software integrates with the ServerView management suite and allows administrators to abstract the server hardware from the network.

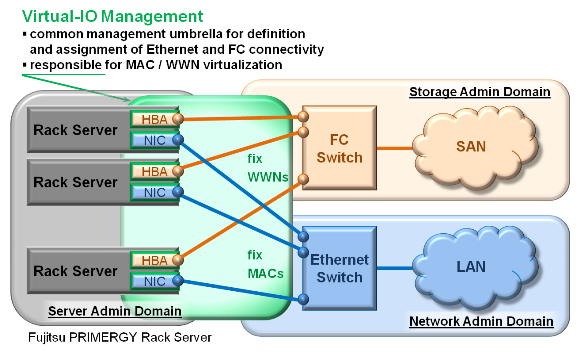

In the VIOM environment, the MAC (Media Access Control) addresses used on the IP (Internet Protocol) network and the storage WWN (World Wide Name) addresses, both WWNN (World Wide Node Name) and WWPN (World Wide Port Name), of the physical network adapters and HBAs (Host Bus Adapters) in the individual machines are masked, and addresses are issued from a pool of virtual addresses instead.
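To make the idea of a virtual address pool concrete, here is a minimal conceptual sketch in Python. It is not the VIOM API: the `AddressPool` class, the helper functions and the address ranges are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


def format_mac(value: int) -> str:
    """Render a 48-bit integer as a colon-separated MAC address."""
    return ":".join(f"{b:02x}" for b in value.to_bytes(6, "big"))


def format_wwpn(value: int) -> str:
    """Render a 64-bit integer as a colon-separated WWPN."""
    return ":".join(f"{b:02x}" for b in value.to_bytes(8, "big"))


@dataclass
class AddressPool:
    """Hands out consecutive addresses from a fixed range, each at most once."""
    start: int
    size: int
    issued: int = 0

    def allocate(self) -> int:
        if self.issued >= self.size:
            raise RuntimeError("address pool exhausted")
        value = self.start + self.issued
        self.issued += 1
        return value


# Hypothetical ranges, for illustration only.
mac_pool = AddressPool(start=0x020000000000, size=4)
wwpn_pool = AddressPool(start=0x5000000000000000, size=4)

# A virtual address pair that would be presented instead of the factory ones.
virtual_mac = format_mac(mac_pool.allocate())
virtual_wwpn = format_wwpn(wwpn_pool.allocate())
print(virtual_mac, virtual_wwpn)
```

The point of the sketch is only that the addresses seen by the LAN and the SAN come from a managed range rather than from whatever hardware happens to be installed.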

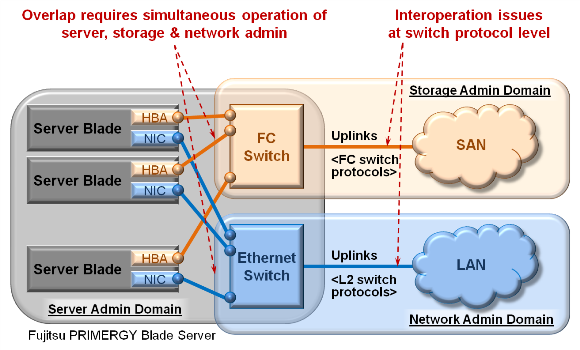

Essentially, what this means is that IT staff can assign LAN and storage addresses from a pool of virtual addresses provided by the VIOM software. Standard practice today is to do the zoning and the LUN (Logical Unit Number) mappings on the disk array using the manufacturer-provided addresses that are baked directly into the HBAs. The problem with this approach is that a hardware failure requires several changes to rectify completely. For example, if an HBA fails and is replaced, the host group mappings on the arrays need to be updated, as does the zoning on the FC (Fibre Channel) switches. The same applies to a NIC (Network Interface Card) failure in an iSCSI (Internet Small Computer Systems Interface) network.

With VIOM you create a clean separation between the IP network/Storage Area Network (SAN) layers and the server layer of the architecture. This simplifies operation and maintenance, since a failure in one part of the stack no longer trickles down and forces modifications in other layers. When an HBA fails or a server is replaced, changes are no longer needed in the network or in the storage fabric. In this new architecture the I/O of the compute nodes is virtualized and represented by profiles, which can be transparently applied to any compute node. That node then assumes the previous node's characteristics, including its I/O addresses.
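The difference between the two approaches can be sketched in a few lines of Python. This is a conceptual model under stated assumptions, not how VIOM is implemented: the `Hba` class, the `needs_fabric_change` helper and every WWPN value are hypothetical, and the zoning is reduced to a plain set of allowed WWPNs.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Hba:
    factory_wwpn: str                    # baked in by the manufacturer
    virtual_wwpn: Optional[str] = None   # applied from a profile, if any

    @property
    def presented_wwpn(self) -> str:
        """The address the fabric sees: the virtual one masks the factory one."""
        return self.virtual_wwpn or self.factory_wwpn


def needs_fabric_change(zoned_wwpns: Set[str], hba: Hba) -> bool:
    """True when the zoning does not know this HBA and would have to be edited."""
    return hba.presented_wwpn not in zoned_wwpns


# Case 1: zoning built on factory addresses, then the HBA is replaced.
old_hba = Hba(factory_wwpn="10:00:00:00:00:00:00:01")
zoning = {old_hba.presented_wwpn}
new_hba = Hba(factory_wwpn="10:00:00:00:00:00:00:02")
physical_case = needs_fabric_change(zoning, new_hba)

# Case 2: zoning built on a virtual address that follows the profile.
profile_wwpn = "50:00:00:00:00:00:00:00"
old_virtual = Hba(factory_wwpn="10:00:00:00:00:00:00:01", virtual_wwpn=profile_wwpn)
virtual_zoning = {old_virtual.presented_wwpn}
new_virtual = Hba(factory_wwpn="10:00:00:00:00:00:00:02", virtual_wwpn=profile_wwpn)
virtual_case = needs_fabric_change(virtual_zoning, new_virtual)

print(physical_case, virtual_case)
```

In the first case the replacement HBA is unknown to the fabric, so the zoning must be touched. In the second the replacement presents the same virtual address, so the fabric is left alone.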

Compute nodes are therefore treated as commodities. When a node is swapped out after a failure, or hardware components are replaced, the profile ensures that no changes need to be made to the other layers: the IP routes, SAN LUNs and so on are preserved and presented to whichever node is associated with the profile. In this way entire servers can serve as hot spares, increasing resiliency and providing high availability for the services being offered.
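The hot-spare idea can be modelled as moving a profile from a failed node to a spare. The sketch below is a toy model only: `Profile`, `ComputeNode`, `assign_profile` and `fail_over` are hypothetical names, the addresses are made up, and the rule that a node must be powered off before a profile is assigned is taken from the walkthrough later in this post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Profile:
    name: str
    mac: str
    wwpn: str


@dataclass
class ComputeNode:
    slot: int
    factory_wwpn: str
    powered_on: bool = False
    profile: Optional[Profile] = None

    @property
    def effective_wwpn(self) -> str:
        """The profile's WWPN when one is assigned, else the factory WWPN."""
        return self.profile.wwpn if self.profile else self.factory_wwpn


def assign_profile(node: ComputeNode, profile: Profile) -> None:
    """Attach a profile; the node has to be powered down first."""
    if node.powered_on:
        raise RuntimeError(f"slot {node.slot} must be powered off first")
    node.profile = profile


def fail_over(failed: ComputeNode, spare: ComputeNode) -> None:
    """Move the failed node's profile to the spare and start the spare."""
    profile = failed.profile
    if profile is None:
        raise ValueError("failed node has no profile to move")
    failed.powered_on = False
    failed.profile = None
    assign_profile(spare, profile)
    spare.powered_on = True


web_profile = Profile("web01", "02:00:00:00:00:00", "50:00:00:00:00:00:00:00")
zoned_wwpns = {web_profile.wwpn}          # SAN zoning, configured once

failed = ComputeNode(slot=1, factory_wwpn="10:00:00:00:00:00:00:01")
spare = ComputeNode(slot=2, factory_wwpn="10:00:00:00:00:00:00:02")
assign_profile(failed, web_profile)
failed.powered_on = True

fail_over(failed, spare)
print(spare.effective_wwpn in zoned_wwpns)   # the zoning set was never modified
```

Because the zoning refers to the profile's address rather than to either blade, the spare is recognised by the fabric as soon as it comes up.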

Below is a step-by-step guide going beyond the theory above to demonstrate what configuring this actually looks like in practice using server blades in a BX900 chassis.

Using VIOM

The first thing to do once the software is installed on the management station is to launch ServerView.

- To access ServerView, point the browser at port 3170 of the management station: https://MANAGEMENT-STATION:3170/ServerView (substitute the host name or IP address of your own management station).

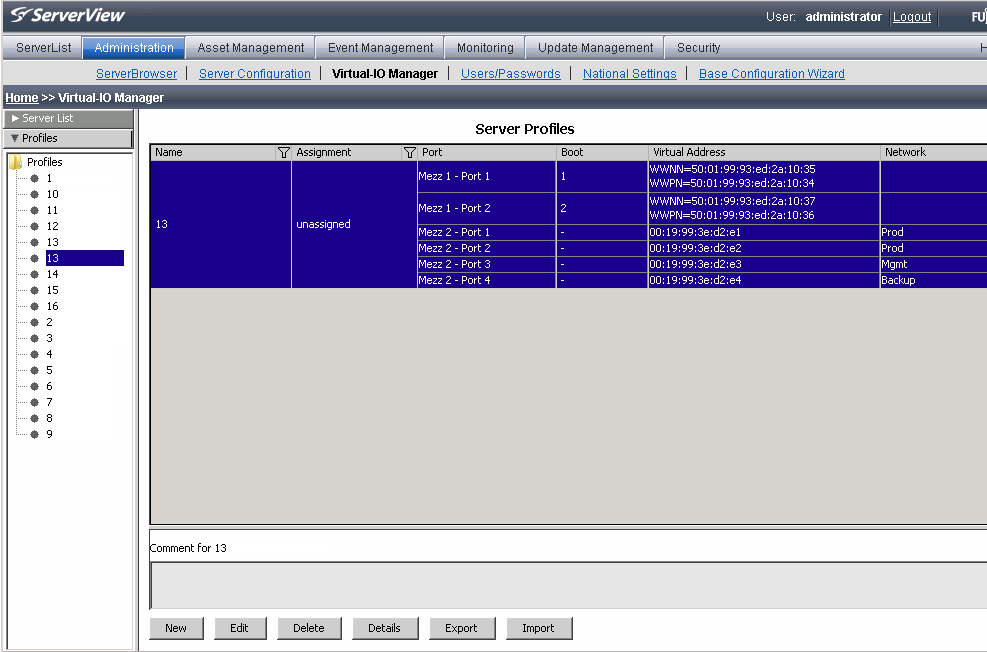

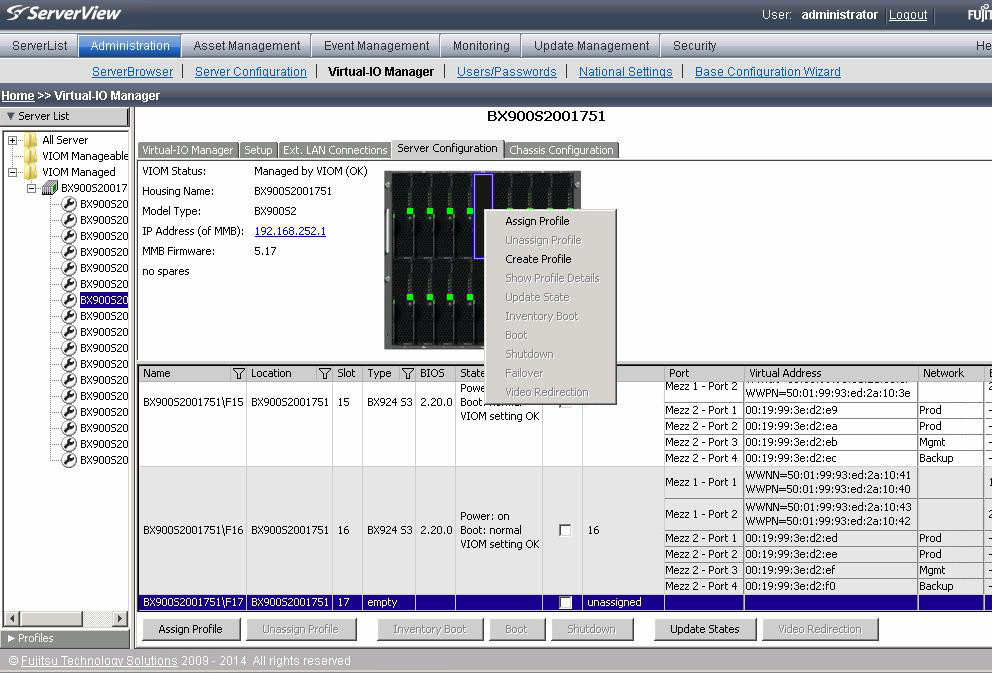

- Select the Administration tab -> Virtual IO Manager.

- You will now be inside VIOM. Click the Profiles tab at the bottom of the tree pane on the left.

- Existing profiles will be shown in the tree pane. To create a new one, click the New button at the bottom of the screen.

-

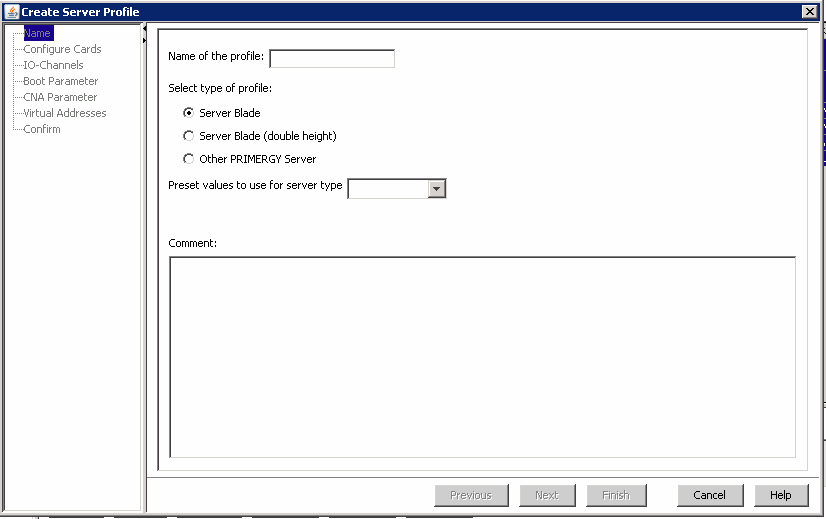

Enter the details in the create profile window and click confirm

- A profile can only be assigned to a machine that is powered down, so a machine that is already booted and running must be shut down first. If it is a blade, power it off from the chassis management.

- Right-click the powered-down blade and click Assign Profile.

-

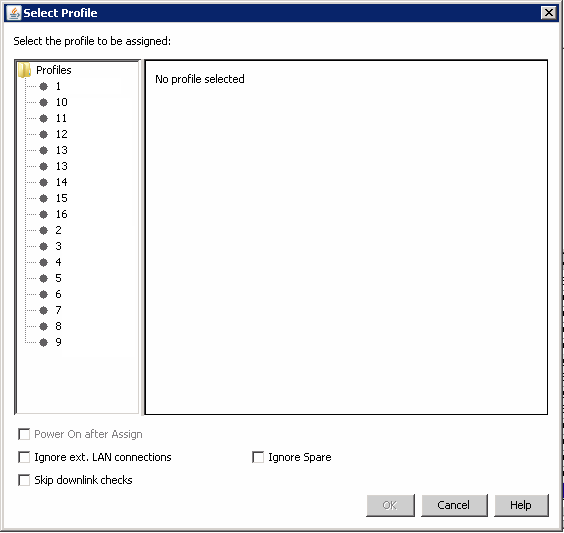

A dialog box will be shown with the available profiles. Select the appropriate profile and click OK

That is all there is to it. Just like that, you have VIOM. Simple. From now on those blades will use the virtual addresses for all their I/O.

They can be booted from the SAN, and the admins can take comfort in the fact that, should the blades fail in the future, no changes will be necessary. The replacement compute nodes will use the existing MAC addresses and World Wide Names. To read more guides like this one, take a look at our Tutorial section.

Nneko Branche.
