tl;dr According to the annual Stack Overflow Developer Survey, MongoDB is the #1 database technology that developers most want to learn in 2020. Not only is it the most wanted, it is also among the top 5 most loved databases, a position it has held for 4 straight years. Given its wide language support, its speed, the flexibility, simplicity and power of its NoSQL document model, and its excellent documentation, it is easy to see why. It is no surprise, then, that Mongo is a rising star amongst databases.

Having recently given Mongo a test run on a small project, I can personally confirm that developers have good reason to love this platform. My experience reinforced the sentiments captured by the 65K+ respondents of the Stack Overflow survey.

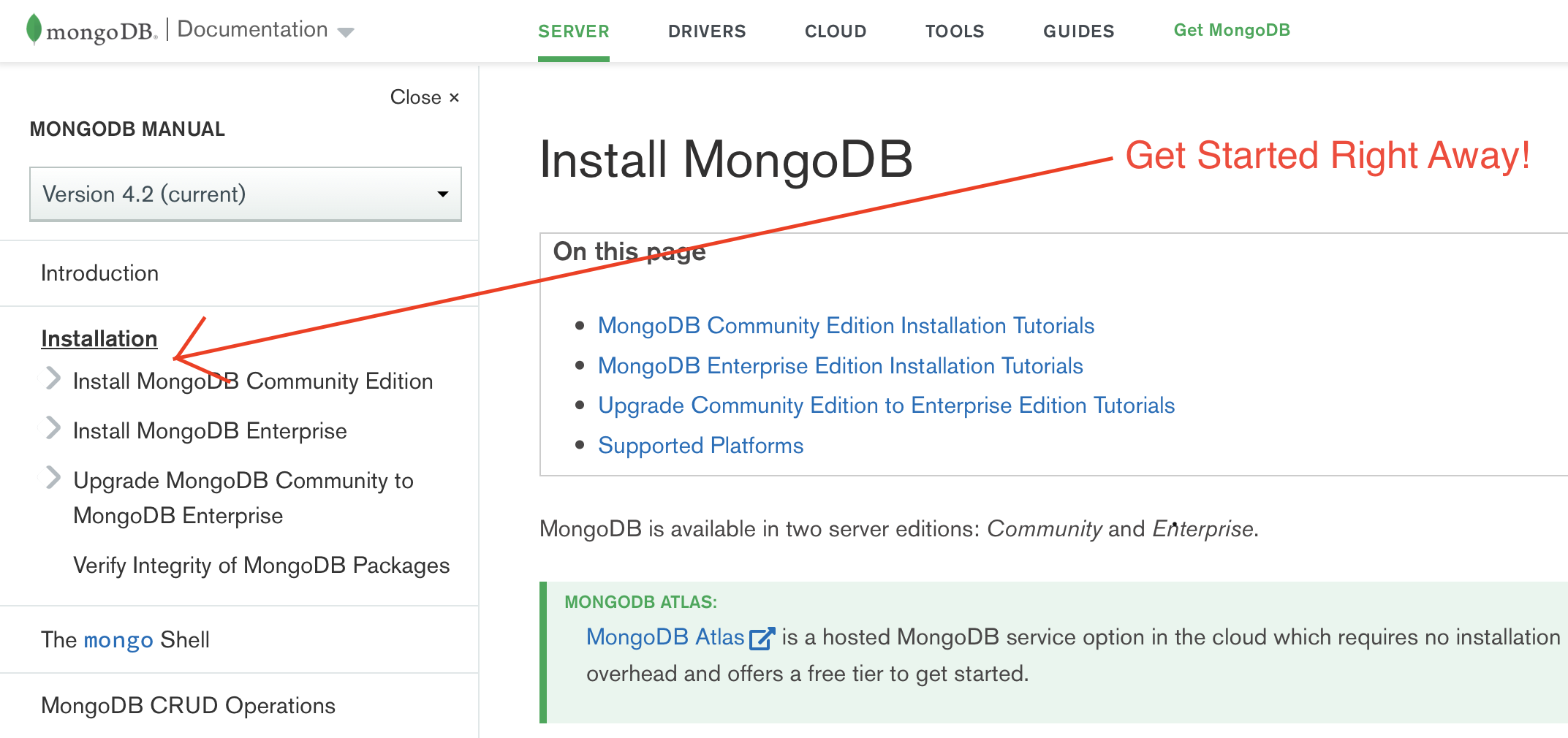

The setup of MongoDB was simple and the team has invested the time to write documentation that gets straight to the point and is easy to understand.

Contrast this with my experience with CouchDB, where I spent a night reading theory and being lectured on database history before I could even install the application, let alone perform my first insert. To be fair, the eventual installation of Couch was a breeze, and it comes with a built-in web GUI, whereas Mongo ships with only a CLI. Nevertheless, the unfriendliness of the CouchDB documentation still stands out in my mind.

Notwithstanding, this post isn't about heaping more praise on Mongo; it is about where it can improve its introduction for newbies like myself. Nor do I wish to repeat the problem of the CouchDB docs by taking too long to get to the point.

Why the need for Promises in MongoDB

As mentioned, despite my love for Mongo, there is one area that I felt they did not explain well or support with adequate example code for developers getting started: how to use JavaScript Promises with their own supported database driver for Node.js, the MongoDB Driver.

Node.js is built around non-blocking I/O: asynchronous operations are started from code running on the event loop, and their results are handed back to it through callback functions. As a consequence, the Node.js MongoDB driver followed the same concept and implemented the callback pattern commonly found in JS code that runs on Node.js. To be clear, the callback pattern is still supported, but it has the downside of creating deeply nested functions, i.e. a callback within a callback within a callback, which can be difficult to read, let alone troubleshoot.
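To make the nesting problem concrete, here is a minimal sketch of three dependent steps written with callbacks. The functions `findUser`, `findOrders` and `findInvoice` are hypothetical stand-ins that simulate asynchronous work with `setImmediate`; they are not part of the MongoDB driver.

```javascript
// Hypothetical callback-style helpers (not MongoDB driver functions).
// Each follows the Node.js convention: callback(error, result).
const findUser = (id, callback) =>
  setImmediate(() => callback(null, { id, name: 'Ada' }))
const findOrders = (user, callback) =>
  setImmediate(() => callback(null, [{ orderId: 1, user: user.id }]))
const findInvoice = (order, callback) =>
  setImmediate(() => callback(null, { invoiceId: 100, orderId: order.orderId }))

// Every dependent step adds another level of nesting and another error check.
function loadInvoice(userId, done) {
  findUser(userId, (err, user) => {
    if (err) return done(err)
    findOrders(user, (err, orders) => {
      if (err) return done(err)
      findInvoice(orders[0], (err, invoice) => {
        if (err) return done(err)
        done(null, invoice)
      })
    })
  })
}

loadInvoice(42, (err, invoice) => {
  if (err) return console.error(err)
  console.log(invoice) // { invoiceId: 100, orderId: 1 }
})
```

With only three steps the code is already three levels deep, and each level repeats the same error check.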

While callbacks are still the foundation, and writing code this way is fine and still supported, newer versions of JavaScript have added mechanisms to the language that make asynchronous code easier to mentally model and read: Promises (from ES6) and the async and await keywords (from ES2017). Recent versions of the MongoDB driver support these language features, giving developers the ability to write code that reads sequentially. So let's delve into an example of how to do this.

First Things First: Creating a Persistent Database Connection

    ...
    const dbDriver = require('mongodb')

    let database = {}

    // dbURI (the connection string) is assumed to be defined in the code elided above
    let dbClient = new dbDriver.MongoClient(dbURI, {
      useUnifiedTopology: true,
      useNewUrlParser: true
    })

    // Holds the database handle once connect() has succeeded
    let dbo = false

    // Convert a string id into a driver ObjectId
    database.getObjectId = (id) => {
      return new dbDriver.ObjectId(id)
    }

    // Open the connection once and cache the database handle
    database.connect = () => {
      return new Promise(async (resolve, reject) => {
        try {
          await dbClient.connect()
          dbo = dbClient.db('<Enter Name of Database Here>')
          if (!dbo) throw new Error('Unable to connect to database')
          resolve(dbo)
        } catch (e) {
          reject(e)
        }
      })
    }

    // Return the cached handle (false until connect() has resolved)
    database.get = () => dbo

    // Close the connection and release its resources
    database.close = () => {
      return new Promise(async (resolve, reject) => {
        try {
          await dbClient.close()
          resolve()
        } catch (e) {
          reject(e)
        }
      })
    }

    module.exports = database

The code above creates a module that will manage our connection for us (think of it as a similar idea to creating a single connection pool), opening the database with the new useUnifiedTopology: true option.

Using the example in this module, our database handle is stored in a variable called dbo, which is retrieved via the .get() method and holds the persistent session for the database. We can then require this module in our other application files and modules whenever access to the data and resources stored in the database is needed.
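As a rough illustration of how another file might use the module, the sketch below defines a query helper. The function name `findUsersByCity`, the `users` collection and the `city` field are all hypothetical, and the module is passed in as a parameter here so the snippet stands on its own; in a real project you would more likely write something like `const database = require('./database')` at the top of the file (the path is an assumption).

```javascript
// Hypothetical helper living in some other module of the application.
// `database` is the connection module described above.
async function findUsersByCity(database, city) {
  const db = database.get()
  if (!db) throw new Error('Call database.connect() before querying')
  // find() returns a cursor; toArray() returns a Promise when no callback is given
  return db.collection('users').find({ city }).toArray()
}
```

The helper never opens or closes a connection itself. It only asks the module for the handle that was created once at startup.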

In particular, this approach covers a more realistic and complex scenario than the documentation does. The standard documentation and guides for Mongo focus on examples where everything is done in a single script: the client creates the connection and destroys it at the end of an operation by calling db.close() in the method's callback. This is not an ideal or realistic scenario in practice. In most instances an application will make many requests to the database from multiple modules, potentially simultaneously, and you would not want to create and destroy the connection each time because of the performance impact. Additionally, and potentially more dangerous, calls to the database are asynchronous, so you also run the risk of closing the connection before all pending operations have completed and finished using the database handle.
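The risk of closing too early can be sketched without a database at all. In the snippet below, `fakeQuery` is a hypothetical stand-in for a database call and `runThenClose` is an illustrative helper, not a driver function; it simply waits for the pending operations before invoking whatever close function it is given.

```javascript
// Hypothetical stand-in for a database call that takes `ms` milliseconds.
const fakeQuery = (label, ms) =>
  new Promise((resolve) => setTimeout(() => resolve(label), ms))

// Wait for the pending operations to complete before releasing the connection.
// Note: Promise.all rejects as soon as any one operation fails.
async function runThenClose(operations, close) {
  const results = await Promise.all(operations)
  await close()
  return results
}

let closed = false
runThenClose([fakeQuery('first', 20), fakeQuery('second', 5)], async () => {
  closed = true
}).then((results) => console.log(results, closed)) // [ 'first', 'second' ] true
```

Calling the close function before `Promise.all` resolves would be the mistake described above: the slower query would still be in flight when the handle disappears.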

By managing the connection through a single module we are in effect recycling/pooling it, as is intended by the new Unified Topology. A few benefits of this approach: we have a single place to troubleshoot connectivity bugs, and it should be more performant and conserve memory and network resources over time compared with creating and tearing down a connection each time a database operation is executed.

For a much more detailed explanation and further information on using this approach read this blog post from terlici.com by Stefan and Vasil.

What is missing from the previous code example is a call to close the connection from the app's main module, which should capture the SIGTERM/SIGINT signals and call the close() function of this module to properly terminate the database connection and release resources.

Closing Database Connection at the end of Program Execution

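    ...
    // server (for example an HTTP server) and db (the database module above)
    // are assumed to be defined in the code elided above
    const exit = () => {
      // Stop accepting new requests, then close the database connection
      server.close(async () => {
        try {
          await db.close()
          process.exit(0)
        } catch (e) {
          console.error(e)
          process.exit(1)
        }
      })
    }

    process.on('SIGINT', exit)
    process.on('SIGTERM', exit)

Above is an exit function that is registered as the handler for the SIGINT and SIGTERM signals of the Node.js process on Unix-based systems. On Windows, where SIGTERM is not supported, you would need to capture another event to handle the close action.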

Now to Promises, Async and Await

If you have read the code above closely, you will have noticed the await keyword before the call to db.close(), inside an anonymous function prefaced by the async keyword. Essentially, async tells the JS interpreter that the function being defined runs asynchronous code, and await marks the calls inside it whose results the function should wait for.

When a call is prefixed with await, the interpreter pauses the remaining statements in that async function until the awaited Promise is resolved or rejected; code outside the function keeps running in the meantime. Thus, even though the code is asynchronous, the flow inside the function reads sequentially.
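A small experiment shows which statements actually wait. This is only a sketch: `sleep` is a hypothetical helper built on `setTimeout`, and the `order` array just records the sequence in which lines run.

```javascript
const order = []

// Hypothetical helper: a Promise that resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms))

async function task() {
  order.push('task: start')
  await sleep(10) // pauses task() only
  order.push('task: after await')
}

const pending = task()          // runs synchronously up to the first await
order.push('caller: continues') // runs while task() is still paused

pending.then(() => console.log(order))
// [ 'task: start', 'caller: continues', 'task: after await' ]
```

The statements inside `task()` run in the order they are written, while the caller carries on without blocking.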

Async and await are actually syntactic sugar for Promises. Promises are special objects that can be returned by functions; they provide .then and .catch methods, which take functions that are called depending on whether the Promise is resolved (kept) or rejected (denied). await only has something to wait for when the function returns a Promise; any other value is simply returned as-is. To handle a Promise without the then and catch methods, all that is required is to mark the enclosing function with the async keyword before its definition and place await before the call. If there is a chance that the Promise may be rejected, enclose the awaited call in a try and catch block so that the failure can be handled.
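The two handling styles can be compared side by side. In this sketch `findById` is a hypothetical function that returns a Promise, resolving for id 1 and rejecting for anything else; it stands in for any Promise-returning call.

```javascript
// Hypothetical Promise-returning function (not a driver method).
const findById = (id) =>
  id === 1
    ? Promise.resolve({ id, name: 'Ada' })
    : Promise.reject(new Error('Not found'))

// Style 1: .then() and .catch()
function nameWithThen(id) {
  return findById(id)
    .then((doc) => doc.name)
    .catch(() => 'unknown')
}

// Style 2: async/await with try/catch
async function nameWithAwait(id) {
  try {
    const doc = await findById(id)
    return doc.name
  } catch (e) {
    return 'unknown'
  }
}
```

Both functions return a Promise and behave the same way for the caller; the second simply reads like ordinary sequential code.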

When converting from the callback pattern to a function that returns a Promise, all that is required is to wrap our previous logic inside a Promise object, whose constructor takes a single function that in turn accepts two functions as input. These two functions are called resolve and reject by convention. The syntax looks like the following:

    return new Promise((resolve, reject) => {
      ...
      try {
        ...
        return resolve()
      } catch (e) {
        return reject(e)
      }
    })

It really is that simple.
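For a concrete instance of this conversion, here is a minimal sketch. `readSetting` is a hypothetical callback-style function invented for illustration, and `readSettingAsync` wraps it so that it returns a Promise.

```javascript
// Hypothetical callback-style function using the Node.js (error, result) convention.
const readSetting = (key, callback) =>
  setImmediate(() =>
    key === 'port'
      ? callback(null, 27017)
      : callback(new Error(`Unknown setting: ${key}`))
  )

// The same operation wrapped so that it returns a Promise.
const readSettingAsync = (key) =>
  new Promise((resolve, reject) => {
    readSetting(key, (err, value) => {
      if (err) return reject(err) // failure rejects the Promise
      resolve(value)              // success resolves it with the result
    })
  })

readSettingAsync('port').then((port) => console.log(port)) // 27017
```

For functions that follow the (error, result) callback convention, Node.js also ships `util.promisify`, which performs this kind of wrapping for you.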

And, fortunately for us, the MongoDB Driver supports promises and will simply return a Promise when the methods of the MongoClient are called without specifying a callback function, i.e. whenever the callback argument is left undefined.
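In practice that means a driver call can be awaited directly. The sketch below assumes `collection` is a driver Collection object, for example one obtained from the handle returned by the module's get() method; the `addUser` name and the shape of the document are hypothetical.

```javascript
// `collection` is assumed to be a MongoDB driver Collection,
// e.g. database.get().collection('users').
async function addUser(collection, user) {
  // No callback is passed, so insertOne() returns a Promise
  const result = await collection.insertOne(user)
  return result.insertedId
}
```

Any error raised by the insert surfaces as a rejected Promise, so the caller can handle it with try and catch around `await addUser(...)`.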

That's it! That is all you have to do to pool a connection and utilize Promises with MongoDB and Node.js.

Nneko Branche.
